Monitor2

The second monitoring system/framework in the New World, monitor2, is implemented on the server https://nw-syd-monitor2.cse.unsw.edu.au/. Unlike monitor1 it does not use Nagios and does not generate any alarms or warnings.

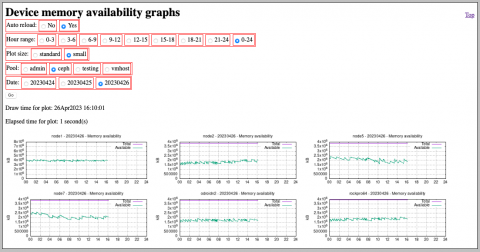

Instead, monitor2 collects and graphs data sampled from various servers. It is designed to implement a simple but flexible way of collecting data from these hosts, storing that data and graphing it, regardless of the data sources. It uses SNMP to collect data samples from the monitored hosts because SNMP operations only lightly load the host compared to, say, using SSH to log in to and query the host. Where the standard SNMP MIBs don't define the desired data, SNMP is extended with external scripts (currently written in bash for ease of portability and maintenance) to provide the required samples.

As of the date of writing, monitor2 supports:

- Disk activity (bytes read and written per local disk and partition)

- Network interface traffic (bytes read and written per network interface)

- CPU usage (percentage) and load average

- Memory usage (RAM)

- Device temperature(s)

- Logged-on user count

Monitored hosts only require:

- That the abovementioned extension scripts be copied into place (see file locations below),

- That a custom snmpd.conf be copied into place, and

- That snmpd be installed and started/run via systemd.

Monitored hosts are also organised into "plot pools", groups of hosts which presumably share some characteristic, and which are always plotted together on the same page. E.g., "vlab", "storecomp" (storage/compute cluster) or "kora" (kora lab workstation). The first time data is plotted via the web interface the plot pool defaults to the DEFAULTPLOTPOOL setting in /etc/monitor2.conf (see below).

Notes about SNMP on Debian

By default, the full suite of SNMP MIBs is not installed when SNMP packages are installed on their own. Instead, you need to install the snmp-mibs-downloader package, which then runs /usr/bin/download-mibs as a post-install step. This applies only to the monitoring server.

File locations

monitor2

The monitor2 server (see above):

- Runs the various scripts used to collect data from the monitored hosts via SNMP, and also

- Runs an Apache2 web server which, via CGI scripts written in bash (aided and abetted by gnuplot), graphs the collected data for user consumption.

| monitor2 files and directories | Description |
|---|---|
| /etc/monitor2.conf | Site-specific configuration: top-level directory location, etc. (see below) |
| /etc/datacollectpoll.conf | Host sampling configuration file (see below) |
| /etc/apache2/apache2.conf | Custom Apache2 configuration. Does not include any site, module or configuration files from the default Apache2 configuration directories - i.e., it's all done here! |
| /etc/systemd/system/datacollectpoll.service | systemd service file used to control our data collection service (see below) |
| /var/samples/monitor2 | Top level directory under which data for sampled/graphed hosts are collected in plot pool-specific directories |
| /usr/local/infrastructure/monitor2/cgi-bin | Directory containing bash CGI scripts for each graphed data type. They use a common library to display pages with similar-looking controls at the top and graphs underneath |
| /usr/local/infrastructure/monitor2/plot-bin | Contains commonx.sh, a set of bash functions used to give a common look-and-feel to the graph pages. source'd by the CGI scripts |
| /usr/local/infrastructure/monitor2/html | Static HTML pages, including index.html which contains links to the CGI scripts |
| /usr/local/infrastructure/monitor2/getbin | Scripts to sample particular data types |
| /usr/local/infrastructure/monitor2/datacollectpoll | Directory containing the datacollectpoll Tcl script (run by systemd) |

Monitored hosts

As noted above, hosts from which data is collected need only run snmpd and have the extension scripts installed. This simplicity is reflected in the shortness of the table below.

| Files and directories on the monitored hosts | Description |
|---|---|
| /etc/snmp/snmpd.conf | Configuration file for the SNMP daemon running on the host. It contains the community name and lists the scripts used to extend the range of data the daemon can provide |
| /usr/local/snmpd_extend | Directory containing the extension scripts referred to by snmpd.conf |

monitor2.conf

Configuration file used to set environment variables used by the datacollectpoll script (and same-named systemd service). See datacollectpoll.service below.

    SLEEPTIME=25
    SAMPLERETRIES=3
    SAMPLETIMEOUT=2
    KEEP_SAMPLES_DAYS=28
    SNMPCOMMUNITY="csereader"
    DEFAULTPLOTPOOL="vlab"
    DATADIR="/var/samples/monitor2"
    DATACOLLECTPOLLCONF="/etc/datacollect.conf"

| Environment variable | Description |
|---|---|
| SLEEPTIME | ? |
| SAMPLERETRIES | Passed to the snmpget and snmpwalk commands to set the number of retry attempts to make while reading data samples from a monitored host |
| SAMPLETIMEOUT | As above, but sets the timeout before retrying |
| KEEP_SAMPLES_DAYS | Number of days to keep data samples. Used by the cron job ~monitor2/getbin/delete_old_samples |
| DEFAULTPLOTPOOL | The initial plot pool selected when data is plotted via the web interface |
| DATADIR | Top-level directory where data samples are stored by the data collection scripts, and read from by the CGI page-plotting scripts |
| DATACOLLECTPOLLCONF | Location and name of the configuration file for datacollectpoll, the immortal script run by datacollectpoll.service |
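
As a rough illustration of how the retry, timeout and community settings could end up on an snmpget command line, here is a minimal Python sketch. The real getbin scripts are not shown in this document, so the function names, the use of SNMP version 2c and the sysUpTime OID are assumptions made for the example. The -v, -c, -r and -t options are standard Net-SNMP command-line options.

```python
from typing import List, Mapping


def snmp_options(env: Mapping[str, str]) -> List[str]:
    """Build the common Net-SNMP options from monitor2.conf-style settings."""
    return [
        "-v", "2c",                   # assumption: the SNMP version is not stated in this document
        "-c", env["SNMPCOMMUNITY"],   # community name
        "-r", env["SAMPLERETRIES"],   # number of retries
        "-t", env["SAMPLETIMEOUT"],   # timeout in seconds before retrying
    ]


def snmpget_command(env: Mapping[str, str], host: str, oid: str) -> List[str]:
    """Return the argument list for one snmpget call (nothing is executed here)."""
    return ["snmpget", *snmp_options(env), host, oid]


# Values copied from the monitor2.conf listing; the OID is sysUpTime.0,
# chosen only as a harmless example.
example_env = {"SNMPCOMMUNITY": "csereader", "SAMPLERETRIES": "3", "SAMPLETIMEOUT": "2"}
print(snmpget_command(example_env, "nw-syd-vxdb", "1.3.6.1.2.1.1.3.0"))
```

In a script started by datacollectpoll.service, os.environ could be passed in place of example_env, since systemd loads /etc/monitor2.conf into the environment via EnvironmentFile.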

datacollectpoll.service

Location: /etc/systemd/system/datacollectpoll.service

    [Unit]
    Description=Data Collect Poll daemon for fleet monitoring
    After=network.target

    [Service]
    User=monitor2
    Group=monitor2
    EnvironmentFile=/etc/monitor2.conf
    ExecStart=/usr/local/infrastructure/datacollectpoll/datacollectpoll

    [Install]
    WantedBy=multi-user.target

Note: the "monitor2" user and group need to be created on the monitor2 server using the ID numbers (1000/1000) specified in the cfengine configuration. See /var/lib/cfengine3/masterfiles/monitorconf.inc (on the cfengine hub).

datacollectpoll

Immortal Tcl script (i.e., never intentionally dies) managed and run by systemd. It runs commands at intervals listed in datacollectpoll.conf to collect data samples and stores them in plain-text files in the samples directory.

It reads the list of poll commands to run, which includes host names and the plot pools to which they belong, and then proceeds to run these commands at specified intervals to collect the data. The scripts themselves are responsible for doing the actual polling and data storage - datacollectpoll simply runs the commands.

datacollectpoll.conf is automatically re-read each time it changes so datacollectpoll does not need to be restarted.

If a command fails (returns an exit code other than zero) datacollectpoll backs off before trying again.

samples

Directory and subdirectories with plain-text files containing collections of data samples named by date, data type and host.

More specifically, each sample file name is formatted as follows:

YYYYMMDD-<host>-<datatype>.dat

Where:

- YYYYMMDD is the date. There's one file per 24-hour day

- <host> is the name of the monitored host

- <datatype> is the type of data - e.g., "netif" (network interface byte counts), "usercount" (user count, especially for VLAB and login servers), etc.
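
Because host names such as nw-syd-vxdb contain hyphens themselves, a file name cannot simply be split on every hyphen. The following Python sketch shows one way to take a name apart. It assumes the data type never contains a hyphen (true of the examples given here), and the function and class names are made up for illustration.

```python
from datetime import date, datetime
from typing import NamedTuple


class SampleFileName(NamedTuple):
    day: date
    host: str
    datatype: str


def parse_sample_filename(name: str) -> SampleFileName:
    """Split 'YYYYMMDD-<host>-<datatype>.dat' into its three parts."""
    if not name.endswith(".dat"):
        raise ValueError(f"not a sample file: {name!r}")
    stem = name[: -len(".dat")]
    # Take the date from the left and the data type from the right;
    # whatever remains in the middle is the host name, hyphens included.
    day_text, _, rest = stem.partition("-")
    host, _, datatype = rest.rpartition("-")
    if not host or not datatype:
        raise ValueError(f"unexpected sample file name: {name!r}")
    day = datetime.strptime(day_text, "%Y%m%d").date()
    return SampleFileName(day, host, datatype)


print(parse_sample_filename("20230401-nw-syd-vxdb-usercount.dat"))
```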

Each file is a plain text file consisting of a series of lines containing space-separated values:

- First field is the sample time since midnight in seconds

- Subsequent fields are typically numerical values (may be floating point). Where data is for potentially multiple attached peripherals, such as disk drives or network interfaces, the fields may consist of groups of peripheral names and their data.

samples directory structure (see also /etc/monitor2.conf)

    root@nw-syd-monitor2:# find /var/samples/monitor2 -type d -ls
    277404 4 drwxr-xr-x 7 monitor2 monitor2 4096 Mar 7 13:52 /var/samples/monitor2
    280270 68 drwxr-xr-x 2 monitor2 monitor2 65536 Apr 27 00:00 /var/samples/monitor2/admin
    277405 412 drwxr-xr-x 2 monitor2 monitor2 417792 Apr 27 00:01 /var/samples/monitor2/vlab
    393252 12 drwxr-xr-x 2 monitor2 monitor2 12288 Apr 27 00:00 /var/samples/monitor2/homeserver
    278614 356 drwxr-xr-x 2 monitor2 monitor2 360448 Apr 27 00:00 /var/samples/monitor2/kora
    393224 64 drwxr-xr-x 2 monitor2 monitor2 61440 Apr 27 00:00 /var/samples/monitor2/storecomp
    root@nw-syd-monitor2:#

sample data file extracts

Disk activity

11 nvme0n1 1989867008 2570418688 1 0 0 nvme0n1p1 1966918656 2570417152 1 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2288322560 3538283008 1 1 1

41 nvme0n1 1989867008 2573756928 0 0 0 nvme0n1p1 1966918656 2573755392 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2288322560 3540136448 1 1 1

71 nvme0n1 1989867008 2577824256 0 0 0 nvme0n1p1 1966918656 2577822720 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2288322560 3541457920 0 1 1

101 nvme0n1 1989867008 2581551616 0 0 0 nvme0n1p1 1966918656 2581550080 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2293008384 3543464448 0 1 1

131 nvme0n1 1989867008 2586585600 0 0 0 nvme0n1p1 1966918656 2586584064 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2295695360 3553381376 1 1 1

161 nvme0n1 1989944832 2596915712 0 0 0 nvme0n1p1 1966996480 2596914176 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2296547328 3557158912 0 1 1

191 nvme0n1 1989944832 2598357504 0 0 0 nvme0n1p1 1966996480 2598355968 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2296928256 3558146560 0 1 1

222 nvme0n1 1989944832 2600430080 0 0 0 nvme0n1p1 1966996480 2600428544 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2296928256 3571383296 0 1 1

252 nvme0n1 1989944832 2601798144 0 0 0 nvme0n1p1 1966996480 2601796608 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2296928256 3588140544 1 1 1

282 nvme0n1 1989944832 2602273280 0 0 0 nvme0n1p1 1966996480 2602271744 0 0 0 nvme0n1p14 4218880 0 0 0 0 nvme0n1p15 10947072 1536 0 0 0 nvme1n1 2296969216 3607321600 1 1 1

Field #1 is the number of seconds since midnight. Fields #2 through #7 (repeated for each device) are:

- Device name

- Cumulative read byte count

- Cumulative write byte count

- ?

- ?

- ?

Note that in this case the host is ARM-based (not Intel) and this is reflected in the device names (i.e., they don't look like "sdX").
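
Since the read and write byte counts are cumulative, a throughput figure has to be derived from two successive samples. How the CGI scripts do this is not shown in this document; the Python sketch below is one possible approach, with hypothetical function names. It skips the three undocumented fields in each device group, and its example lines are the first two samples above, shortened to two devices.

```python
from typing import Dict, Tuple

FIELDS_PER_DEVICE = 6  # name, read bytes, write bytes, then three undocumented fields


def parse_disk_line(line: str) -> Tuple[int, Dict[str, Tuple[int, int]]]:
    """Return (seconds_since_midnight, {device: (read_bytes, write_bytes)})."""
    fields = line.split()
    seconds = int(fields[0])
    rest = fields[1:]
    if len(rest) % FIELDS_PER_DEVICE != 0:
        raise ValueError("device fields do not come in groups of six")
    counters = {}
    for i in range(0, len(rest), FIELDS_PER_DEVICE):
        name, read_bytes, write_bytes = rest[i], rest[i + 1], rest[i + 2]
        counters[name] = (int(read_bytes), int(write_bytes))
    return seconds, counters


def rates(prev_line: str, curr_line: str) -> Dict[str, Tuple[float, float]]:
    """Bytes per second read and written for each device between two samples."""
    t0, before = parse_disk_line(prev_line)
    t1, after = parse_disk_line(curr_line)
    elapsed = t1 - t0
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    result = {}
    for name, (read1, write1) in after.items():
        if name not in before:
            continue  # device appeared between the two samples
        read0, write0 = before[name]
        result[name] = ((read1 - read0) / elapsed, (write1 - write0) / elapsed)
    return result


first = "11 nvme0n1 1989867008 2570418688 1 0 0 nvme1n1 2288322560 3538283008 1 1 1"
second = "41 nvme0n1 1989867008 2573756928 0 0 0 nvme1n1 2288322560 3540136448 1 1 1"
print(rates(first, second))
```

The sketch does not handle counters that reset (for example after a reboot) or samples that straddle midnight; both would need extra care in real use.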

User count

    root@nw-syd-monitor2:# head /var/samples/monitor2/vlab/20230401-nw-syd-vxdb-usercount.dat
    26 26
    146 25
    266 25
    386 28
    506 29
    626 28
    746 28
    866 28
    986 28
    1106 29
    root@nw-syd-monitor2:#

Much simpler this time. Field #1 is the seconds since midnight and field #2 is the instantaneous user count at the time of the sample.
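
To make the two-column format concrete, the Python sketch below reads the ten samples shown above, finds the highest user count and converts its timestamp to a time of day. The function names are illustrative only and are not part of monitor2.

```python
from typing import List, Tuple

EXTRACT = """\
26 26
146 25
266 25
386 28
506 29
626 28
746 28
866 28
986 28
1106 29
"""


def parse_usercount(text: str) -> List[Tuple[int, int]]:
    """Return (seconds_since_midnight, user_count) pairs, skipping malformed lines."""
    samples = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue
        samples.append((int(parts[0]), int(parts[1])))
    return samples


def clock(seconds: int) -> str:
    """Format seconds since midnight as HH:MM:SS."""
    hours, remainder = divmod(seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


samples = parse_usercount(EXTRACT)
# max() returns the first sample that reaches the highest count.
peak_time, peak_users = max(samples, key=lambda sample: sample[1])
print(f"peak of {peak_users} users at {clock(peak_time)}")
```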

datacollectpoll.conf

Format:

- Blank lines are ignored.

- '#' to end of line is a comment

- First space-separated field on the line is the command to run. The command file must exist at the time the configuration file is loaded

- Subsequent space-separated fields are optional arguments passed to the aforementioned command. Although optional format-wise, the existing scripts take two arguments:

  - The poll group, i.e. the plot pool to which the host belongs

  - The host to sample

- An optional ';' (semicolon) followed by an interval in seconds changes the time between samples from the default 30 to the specified interval

Example:

    /usr/local/infrastructure/monitor2/getbin/get_load_stats admin nw-syd-cfengine-hub ; 30
    /usr/local/infrastructure/monitor2/getbin/get_disk_stats admin nw-syd-cfengine-hub ; 30
    /usr/local/infrastructure/monitor2/getbin/get_netif_stats admin nw-syd-cfengine-hub ; 30
    /usr/local/infrastructure/monitor2/getbin/get_load_stats vlab nw-syd-armvx1 ; 30
    /usr/local/infrastructure/monitor2/getbin/get_disk_stats vlab nw-syd-armvx1 ; 30
    /usr/local/infrastructure/monitor2/getbin/get_netif_stats vlab nw-syd-armvx1 ; 30
    /usr/local/infrastructure/monitor2/getbin/get_usercount_stats vlab nw-syd-armvx1 ; 120
    /usr/local/infrastructure/monitor2/getbin/get_load_stats vlab nw-syd-vxdb ; 30
    /usr/local/infrastructure/monitor2/getbin/get_disk_stats vlab nw-syd-vxdb ; 30
    /usr/local/infrastructure/monitor2/getbin/get_netif_stats vlab nw-syd-vxdb ; 30
    /usr/local/infrastructure/monitor2/getbin/get_usercount_stats vlab nw-syd-vxdb ; 120
    ...
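
The format rules above can be sketched as a small line parser. This is written in Python purely for illustration (datacollectpoll itself is a Tcl script whose source is not shown here), and the names PollEntry and parse_poll_line are hypothetical. The check that the command file exists is left out so that the sketch runs on any machine.

```python
from typing import List, NamedTuple, Optional

DEFAULT_INTERVAL = 30  # seconds, used when no '; interval' suffix is given


class PollEntry(NamedTuple):
    command: str
    args: List[str]
    interval: int


def parse_poll_line(line: str) -> Optional[PollEntry]:
    """Parse one configuration line; return None for blank or comment-only lines."""
    # Everything from '#' to the end of the line is a comment.
    line = line.split("#", 1)[0].strip()
    if not line:
        return None
    # An optional ';' separates the command and its arguments from the interval.
    body, separator, interval_text = line.partition(";")
    interval = int(interval_text) if separator else DEFAULT_INTERVAL
    fields = body.split()
    if not fields:
        raise ValueError("line has an interval but no command")
    return PollEntry(fields[0], fields[1:], interval)


entry = parse_poll_line(
    "/usr/local/infrastructure/monitor2/getbin/get_usercount_stats vlab nw-syd-vxdb ; 120"
)
print(entry)
```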

Deleting old data samples

Data sample files are cleaned out by the getbin/delete_old_samples cron job, which is run nightly by the monitor2 user.

The KEEP_SAMPLES_DAYS setting in /etc/monitor2.conf determines the number of days the data is kept.
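
The delete_old_samples script itself is not reproduced here. As a hedged sketch of the retention rule, the Python function below selects files whose YYYYMMDD prefix is older than KEEP_SAMPLES_DAYS days. The function name, and the choice to keep a file that is exactly KEEP_SAMPLES_DAYS days old, are assumptions.

```python
from datetime import date, datetime, timedelta
from typing import Iterable, List


def expired_samples(names: Iterable[str], today: date, keep_days: int) -> List[str]:
    """Return the sample file names dated more than keep_days before today."""
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in names:
        try:
            # Sample file names start with the date as YYYYMMDD.
            day = datetime.strptime(name[:8], "%Y%m%d").date()
        except ValueError:
            continue  # not a sample file; leave it alone
        if day < cutoff:
            expired.append(name)
    return expired


names = [
    "20230401-nw-syd-vxdb-usercount.dat",
    "20230420-nw-syd-vxdb-usercount.dat",
    "README",
]
print(expired_samples(names, today=date(2023, 4, 30), keep_days=28))
```

The function only reports candidates. Actual removal would be a separate step so that the selection can be checked first.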