Proposed home directory architecture, configuration and management

Preamble

The way it was

In the Old World, most CSE home directories were stored on six main NFS servers, each with local RAID disk storage for the home directories.

These servers had names such as ravel, kamen, etc. Home directories were located in ext3/ext4/xfs file systems created on local logical block devices and mounted under /export (e.g., /export/kamen/1, /export/ravel/2, etc.), from where they were exported via NFS to lab computers, VLAB servers and login servers. For ease of management and speed of fsck on reboot, each physical server's available disk storage was divided into multiple logical block devices, each smaller than 1TB.

Each user's home directory path was hard-coded to a particular file system on a particular server, e.g., /export/kamen/1/plinich.

This arrangement meant that a problem with a single server could affect one sixth of all accounts until the server itself was fixed, or until the affected home directories were restored from backup to another server and the users' home directory locations were updated. It also meant that each home directory existed on only one of six physical servers, all in a single geographic location.

The way it might be

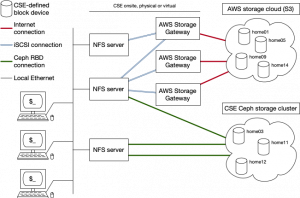

The proposed architecture decouples home directories from specific physical or virtual NFS servers and places them on virtual block devices that are stored either in Amazon AWS S3 or in CSE's distributed Ceph storage cluster.

The key ideas are:

- Home directories are stored on block devices that are defined and stored either in Amazon's AWS S3 storage or in CSE's own Ceph storage cluster.

- NFS servers may be physical or virtual and DO NOT have local storage for home directories. They do, however, have their own IP addresses, which have NO ASSOCIATION with any of the home directory block devices. The IP address of an NFS server is used solely to manage the NFS server itself and plays no role in making the home directory NFS exports available.

- Home directory storage is attached to the NFS server that exports it, either via iSCSI (for AWS) or as a Ceph RADOS Block Device (RBD) (for Ceph). Both attachment methods run over TCP/IP and present the storage to Linux as an ordinary block device, which can then be mounted as a normal ext3/ext4/xfs file system.

- Each home directory block device is associated with a same-named DNS entry that has its own IP address. E.g., "home02" would be associated with a DNS entry of "home02.cse.unsw.edu.au" or "home02.cseunsw.site" with a static IP address of, say, 129.94.242.ABC. Each such IP address is associated with ONE (and only one) home directory block device.
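The one-address-per-store rule can be checked mechanically. Below is a minimal sketch. It assumes a hypothetical inventory file, which is not part of this proposal, listing one store name and its IP address per line:

```bash
#!/usr/bin/env bash
# Hypothetical inventory format, one store per line:
#   home02 129.94.242.ABC
# Blank lines and lines starting with '#' are ignored.

# check_inventory FILE
# Prints every shared address, repeated store name or missing address,
# and returns non-zero if any problem is found.
check_inventory() {
    awk '
        /^[ \t]*(#|$)/ { next }                 # skip comments and blank lines
        NF < 2 { printf "missing address for %s\n", $1; bad = 1; next }
        {
            if ($2 in owner) {
                printf "duplicate address %s: %s and %s\n", $2, owner[$2], $1
                bad = 1
            } else {
                owner[$2] = $1
            }
            if ($1 in seen) {
                printf "duplicate store name %s\n", $1
                bad = 1
            }
            seen[$1] = 1
        }
        END { exit bad }
    ' "$1"
}
```

For example, `check_inventory stores.txt` prints nothing and succeeds when every store has an address of its own. The file name is only illustrative.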

Importantly, the NFS servers have no implicit or explicit association with any particular home directory store. Instead, an NFS server can export any number of arbitrary home directory file systems.[1]

The procedure for making a home directory's block device contents available via NFS

- Select the home directory block device to be exported, e.g., "homeXX", in either AWS or Ceph.

- If homeXX is in AWS:

  - Associate the "homeXX" block device in AWS with one of the on-site AWS Storage Gateways using the AWS web console, and make it available to local hosts via iSCSI. See ???

  - Attach the homeXX block device via iSCSI to the NFS server that is to export its file system.

- If homeXX is in the Ceph storage cluster:

  - Map the homeXX RBD image on the NFS server.

- Then, whichever backend holds homeXX:

  - `mount` the attached device on the NFS server as `/export/homeXX/1`[2].

  - Add an entry to `/etc/exports` for the home directory file system, then run `exportfs -ra` to tell the NFS server daemon to make the file system available.

  - Find the IP address associated with the home directory file system (homeXX.cse.unsw.edu.au, etc.) and add this address to the server's network interface with `ip addr add 129.94.242.ABC/24 dev eth0` (check this!)

Most steps above can be automated/scripted.
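The Bash function below is one sketch of that automation, covering the steps for a single store. It is illustrative only. The pool name `rbd`, the interface `eth0`, the /24 prefix, the client network and the export options are all assumptions. For AWS, the iSCSI login is expected to have been done beforehand, so the caller passes in the resulting device.

```bash
#!/usr/bin/env bash
# Sketch of the export procedure for one home directory store.
# Arguments (all placeholders):
#   $1 store    e.g. home02: RBD image / iSCSI volume name and DNS name
#   $2 backend  "ceph" or "aws"
#   $3 address  the store's own IP address (from its DNS entry)
#   $4 device   aws only: the block device the iSCSI volume appeared as
# With DRY_RUN=1 the commands are printed instead of executed.

# Hypothetical site defaults: check every one before real use.
RBD_POOL=${RBD_POOL:-rbd}                        # Ceph pool holding the images
IFACE=${IFACE:-eth0}                             # interface for store addresses
PREFIX=${PREFIX:-24}                             # prefix length of that network
EXPORT_CLIENTS=${EXPORT_CLIENTS:-192.0.2.0/24}   # placeholder client network
EXPORT_OPTS=${EXPORT_OPTS:-rw,sync,no_subtree_check}

# Run a command, or just print it when DRY_RUN=1.
run() {
    if [[ ${DRY_RUN:-0} == 1 ]]; then
        echo "$*"
    else
        "$@"
    fi
}

export_store() {
    local store=$1 backend=$2 addr=$3 device=${4:-}
    local mnt=/export/$store/1                   # always ".../1" (note [2])
    local line="$mnt $EXPORT_CLIENTS($EXPORT_OPTS)"

    case $backend in
        ceph) ;;
        aws)  [[ -n $device ]] || { echo "aws: pass the iSCSI device" >&2; return 1; } ;;
        *)    echo "unknown backend: $backend" >&2; return 1 ;;
    esac

    # Refuse to continue if another host still answers on the store's
    # address (iputils arping: -D exits 0 only when nobody replies;
    # other arping implementations use different flags).
    run arping -D -q -c 2 -I "$IFACE" "$addr" \
        || { echo "$addr is still in use elsewhere" >&2; return 1; }

    if [[ $backend == ceph ]]; then
        # 'rbd map' prints the name of the new device, e.g. /dev/rbd0.
        if [[ ${DRY_RUN:-0} == 1 ]]; then
            echo "rbd map $RBD_POOL/$store"
            device=/dev/rbdN                     # placeholder for dry runs
        else
            device=$(rbd map "$RBD_POOL/$store") || return 1
        fi
    fi

    run mkdir -p "$mnt"
    run mount "$device" "$mnt" || return 1

    # Append the export entry only once; re-running must not duplicate it.
    if [[ ${DRY_RUN:-0} == 1 ]]; then
        echo "add to /etc/exports: $line"
    elif ! grep -qxF -- "$line" /etc/exports 2>/dev/null; then
        printf '%s\n' "$line" >> /etc/exports
    fi
    run exportfs -ra

    # Bring the store's address up last, once the export exists.
    run ip addr add "$addr/$PREFIX" dev "$IFACE"
}
```

Running it first as `DRY_RUN=1 export_store home02 ceph 129.94.242.ABC` prints the commands without touching the server. The store's address can be looked up with `getent hosts home02.cse.unsw.edu.au`. The address is added last, so clients reach the server only once the export is in place.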

Repeat the above for each file system.
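Moving a file system to a different NFS server means running the export procedure in reverse on the old server first. That teardown must finish before the new server attaches the device, since only one server may hold it at a time (note [1]). Below is a minimal Bash sketch with hypothetical function names. It makes the same assumptions: pool `rbd`, interface `eth0` and a /24 prefix. For AWS, the iSCSI target IQN is passed in by the caller.

```bash
#!/usr/bin/env bash
# Sketch: release one home directory store from this NFS server so that
# another server can take it over. Placeholders:
#   $1 store (e.g. home02)  $2 backend ("ceph" or "aws")  $3 address
#   $4 aws only: iSCSI target IQN of the volume
# With DRY_RUN=1 the commands are printed instead of executed.

RBD_POOL=${RBD_POOL:-rbd}      # hypothetical pool name
IFACE=${IFACE:-eth0}           # hypothetical interface
PREFIX=${PREFIX:-24}           # hypothetical prefix length

# Run a command, or just print it when DRY_RUN=1.
run() {
    if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi
}

release_store() {
    local store=$1 backend=$2 addr=$3 target=${4:-}
    local mnt=/export/$store/1

    case $backend in
        ceph) ;;
        aws)  [[ -n $target ]] || { echo "aws: pass the iSCSI target IQN" >&2; return 1; } ;;
        *)    echo "unknown backend: $backend" >&2; return 1 ;;
    esac

    # Remove the store's address first, so no new client requests arrive.
    run ip addr del "$addr/$PREFIX" dev "$IFACE"

    # Drop the export line (it starts with the mount point) and re-export.
    if [[ ${DRY_RUN:-0} == 1 ]]; then
        echo "remove $mnt from /etc/exports"
    else
        sed -i "\\#^$mnt[[:space:]]#d" /etc/exports
    fi
    run exportfs -ra

    run umount "$mnt" || return 1

    # Only after this may another NFS server attach the device.
    if [[ $backend == ceph ]]; then
        run rbd unmap "$RBD_POOL/$store"
    else
        run iscsiadm -m node -T "$target" --logout
    fi
}
```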

At this point, NFS clients should be able to mount the home directory file system(s) like this (or the equivalent via the automounter):

    # mount -t nfs homeXX:/export/homeXX/1 /mnt
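For the automounter, one option is an indirect map with one entry per user. The sketch below assumes an autofs map mounted on /home. It also assumes each user's directory sits directly under /export/homeXX/1, as in the Old World example /export/kamen/1/plinich. The helper names and mount options are illustrative.

```bash
#!/usr/bin/env bash
# Print one autofs indirect-map entry (e.g. for a map mounted on /home)
# that mounts USER's home directory from STORE, reached by the store's
# own DNS name. Hypothetical server layout: /export/<store>/1/<user>.
automount_entry() {
    local user=$1 store=$2
    printf '%s\t-fstype=nfs,rw\t%s:/export/%s/1/%s\n' \
        "$user" "$store" "$store" "$user"
}

# Build a whole map from "user store" lines on stdin ('#' lines skipped).
automount_map() {
    local user store
    while read -r user store || [[ -n $user ]]; do
        [[ -z $user || $user == \#* ]] && continue
        automount_entry "$user" "$store"
    done
}
```

For example, `automount_entry plinich home02` prints a map line that mounts `home02:/export/home02/1/plinich`.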

AWS Storage Gateway

The AWS Storage Gateway is an appliance that is installed on-site on either a virtual or a physical server. It provides local iSCSI access to block devices defined in AWS's S3 storage. Using local storage (ideally SSD) as a cache, the Storage Gateway provides fast local access to cached data, which is replicated to S3.

When the appliance is installed, the owner performs basic configuration on the server's console to give it an IP address and to authenticate it to AWS. From then on, AWS takes over management and configuration, with configuration done via the AWS web console.

The screen snapshots to the right show the AWS console configuration interface for the Storage Gateway itself, and the volumes made available via iSCSI.

Note that the last snapshot, under "Connecting your volume", includes a link entitled "Connecting your volumes to Linux".

Note also that it is possible to move volumes from one AWS Storage Gateway to another without loss of data. This is most useful if a Storage Gateway, or its underlying hardware, fails. Because all data is stored or replicated in S3, it can be reloaded from there into a new Storage Gateway's cache.
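On the NFS server side, a gateway volume is connected with the standard open-iscsi tools. The same calls re-attach a volume after it has moved to a new gateway. Below is a minimal sketch. The gateway address and target IQN are placeholders to be copied from the volume's details in the AWS console. Port 3260 is the iSCSI default, and LUN 0 is assumed.

```bash
#!/usr/bin/env bash
# Sketch: attach (or re-attach) a Storage Gateway volume with open-iscsi.
#   $1 gateway  IP address of the on-site Storage Gateway (placeholder)
#   $2 target   iSCSI target IQN shown for the volume in the AWS console
# With DRY_RUN=1 the commands are printed (to stderr) instead of executed.

# Run a command, or just print it when DRY_RUN=1.
run() {
    if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi
}

# udev creates a stable symlink for each iSCSI LUN under /dev/disk/by-path.
iscsi_device_path() {
    local gateway=$1 target=$2 lun=${3:-0}
    printf '/dev/disk/by-path/ip-%s:3260-iscsi-%s-lun-%s\n' "$gateway" "$target" "$lun"
}

# Log in to the volume's target; only the device path goes to stdout.
attach_volume() {
    local gateway=$1 target=$2
    run iscsiadm -m discovery -t sendtargets -p "$gateway:3260" >&2 || return 1
    run iscsiadm -m node -T "$target" -p "$gateway:3260" --login >&2 || return 1
    run udevadm settle >&2            # wait for udev to create the by-path link
    iscsi_device_path "$gateway" "$target"
}

# Log out of the volume's target on the given gateway.
detach_volume() {
    local gateway=$1 target=$2
    run iscsiadm -m node -T "$target" -p "$gateway:3260" --logout >&2
}
```

The printed /dev/disk/by-path name does not depend on the order in which disks are discovered. That makes it a safer device to hand to the mount step than a /dev/sdX name. When a volume moves to another gateway, calling `detach_volume` with the old gateway's details and then `attach_volume` with the new one re-establishes the session.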

Notes

- [1] Constrained, of course, by the limitation that only ONE NFS server at a time should attach a storage block device. Consequently, any single home directory file system can be exported by only one NFS server at a time. This constraint is not a property of the architecture being discussed. It arises because a conventional (non-cluster) file system such as ext3/ext4/xfs can be mounted by only one host at a time without corruption occurring.

- [2] The file system will always be mounted under "…/1" to maintain compatibility with Old World heuristics.