Storage and compute cluster

Revision as of 10:47, 24 October 2022

The storage and compute cluster is intended to replace CSE's VMware and SAN infrastructure. Its primary functions are:

- A resilient, redundant storage cluster made up of multiple cheap(-ish) rack-mounted storage nodes, each running Linux and Ceph on multiple local SSDs, and

- Multiple compute nodes running Linux and QEMU/KVM that act as both secondary storage nodes and virtual machine hosts. These compute nodes are similar in CPU count and RAM to CSE's standalone login/VLAB servers.
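Because the compute nodes are sized alike, a virtual machine from one node can in principle be restarted on any other node with enough spare CPU and RAM. The Python sketch below illustrates that idea with a simple greedy placement: largest VMs (by RAM) first, each onto the surviving node with the most free RAM. The `ComputeNode` class, the `restart_elsewhere` function, and all node names, VM names and sizes are hypothetical; this is not how any particular QEMU/KVM management tool chooses a host.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeNode:
    """Hypothetical model of a compute node: its capacity plus the VMs it runs."""
    name: str
    cpus: int
    ram_gb: int
    # VM name -> (vCPUs, RAM in GB)
    vms: dict[str, tuple[int, int]] = field(default_factory=dict)
    up: bool = True

    def free(self) -> tuple[int, int]:
        """Return (free vCPUs, free RAM in GB), assuming no overcommit."""
        used_cpu = sum(cpu for cpu, _ in self.vms.values())
        used_ram = sum(ram for _, ram in self.vms.values())
        return self.cpus - used_cpu, self.ram_gb - used_ram


def restart_elsewhere(failed: ComputeNode, nodes: list[ComputeNode]) -> dict[str, str]:
    """Mark `failed` as down and reassign each of its VMs to a surviving node.

    Greedy heuristic: place the largest VMs (by RAM) first, each onto the
    surviving node that has enough free CPUs and the most free RAM.
    """
    failed.up = False
    placement: dict[str, str] = {}
    by_ram_desc = sorted(failed.vms.items(), key=lambda kv: kv[1][1], reverse=True)
    for vm, (vcpus, ram) in by_ram_desc:
        candidates = [
            n for n in nodes
            if n.up and n.free()[0] >= vcpus and n.free()[1] >= ram
        ]
        if not candidates:
            raise RuntimeError(f"no surviving node has capacity for {vm}")
        target = max(candidates, key=lambda n: n.free()[1])
        target.vms[vm] = (vcpus, ram)
        placement[vm] = target.name
    failed.vms.clear()
    return placement


if __name__ == "__main__":
    cn1 = ComputeNode("cn1", 32, 256, {"web": (4, 16), "db": (8, 64)})
    cn2 = ComputeNode("cn2", 32, 256, {"mail": (4, 32)})
    cn3 = ComputeNode("cn3", 32, 256)
    print(restart_elsewhere(cn1, [cn1, cn2, cn3]))  # {'db': 'cn3', 'web': 'cn2'}
```

Placing the largest VMs first reduces the chance that a big VM is left with no node able to hold it. Restarting a VM on another host only works because its disks live on shared storage (here, the Ceph cluster) rather than on the failed node.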

Important implementation considerations

- Data in the storage cluster is replicated in real time across multiple hosts, so the failure of one or more nodes does not cause data loss. When such a failure occurs, the cluster software (Ceph) automatically rebuilds the lost replicas on the remaining nodes.

- Likewise, the compute nodes are all configured alike in terms of networking, CPU count and RAM, so if any compute node fails, the virtual machines running on it can be migrated or restarted on another compute node without loss of functionality.

- Although the nodes are initially co-located in the same data centre (K17), the intention is that storage and compute nodes can be distributed across multiple data centres (in particular, two at the UNSW Kensington campus), so that even the failure of an entire data centre, not just of a single host, does not prevent full operation of all hosted services from being restored.
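Ceph decides where replicas live using its CRUSH algorithm and a configurable failure domain (for example `host` or `datacenter`). The Python sketch below does not reproduce CRUSH; it substitutes simple rendezvous (highest-random-weight) hashing to show why spreading replicas across failure domains matters. It assumes a replication factor of 3 (Ceph's default pool size) and a hypothetical six-host layout; the host names and the second site `DC2` are illustrative only.

```python
import hashlib
from itertools import combinations

# Hypothetical layout: host -> data centre. "DC2" stands in for a future second site.
HOSTS = {
    "stor1": "K17", "stor2": "K17", "stor3": "K17",
    "stor4": "DC2", "stor5": "DC2", "stor6": "DC2",
}
REPLICAS = 3  # assumed replication factor


def score(obj: str, host: str) -> int:
    """Deterministic pseudo-random weight for an (object, host) pair."""
    digest = hashlib.sha256(f"{obj}/{host}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(obj: str, hosts: dict[str, str], replicas: int = REPLICAS) -> list[str]:
    """Choose `replicas` distinct hosts, using each data centre once before reusing any."""
    ranked = sorted(hosts, key=lambda h: score(obj, h), reverse=True)
    chosen: list[str] = []
    # Pass 1: at most one replica per data centre.
    for h in ranked:
        if len(chosen) < replicas and hosts[h] not in {hosts[c] for c in chosen}:
            chosen.append(h)
    # Pass 2: fill any remaining replicas on other distinct hosts.
    for h in ranked:
        if len(chosen) < replicas and h not in chosen:
            chosen.append(h)
    return chosen


def tolerates(placements: dict[str, list[str]], failure_sets: list[set[str]]) -> bool:
    """True if every object keeps at least one replica under every failure set."""
    return all(
        any(h not in down for h in copies)
        for copies in placements.values()
        for down in failure_sets
    )


def site_failures(hosts: dict[str, str]) -> list[set[str]]:
    """One failure set per data centre: all of its hosts down at once."""
    return [{h for h, dc in hosts.items() if dc == site} for site in set(hosts.values())]


if __name__ == "__main__":
    objects = [f"obj{i}" for i in range(1000)]
    two_sites = {o: place(o, HOSTS) for o in objects}
    any_two_hosts = [set(pair) for pair in combinations(HOSTS, REPLICAS - 1)]
    print(tolerates(two_sites, any_two_hosts))         # True: 3 distinct hosts per object
    print(tolerates(two_sites, site_failures(HOSTS)))  # True: each site holds a replica

    one_site = {h: "K17" for h in HOSTS}  # everything co-located, as initially
    colocated = {o: place(o, one_site) for o in objects}
    print(tolerates(colocated, site_failures(one_site)))  # False: losing K17 loses every copy
```

The last check shows the point of the multi-site plan: with every node in K17, no replica placement can survive the loss of that data centre. In Ceph the equivalent decision is expressed in the CRUSH map and rules, by choosing which bucket type acts as the failure domain.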

Concept

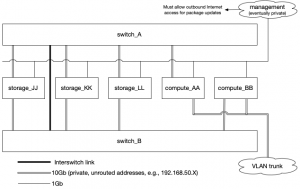

The diagram above shows the basic concept of the cluster.

- The primary storage and compute nodes have 10 Gb/s network interfaces, used to access and maintain the data store.

- Two network switches provide redundancy for storage-node traffic, ensuring that at least one compute node retains access to the data store if a switch fails.

- The cluster is managed over a subnetwork separate from the data store's own traffic network.
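Keeping management traffic on its own subnetwork is easy to get subtly wrong when addresses are assigned by hand. A small check like the Python sketch below, using only the standard `ipaddress` module, can confirm that the subnets do not overlap and that every node's address on each network falls inside the right subnet. The subnets, node names and addresses are hypothetical; the real address plan is not given here.

```python
import ipaddress
from itertools import combinations

# Hypothetical address plan (the real subnets are not specified here).
PLAN = {
    "storage": ipaddress.ip_network("10.10.0.0/24"),
    "management": ipaddress.ip_network("10.20.0.0/24"),
}

# Hypothetical node addresses: node -> {network name: address}.
NODES = {
    "stor1": {"storage": "10.10.0.11", "management": "10.20.0.11"},
    "comp1": {"storage": "10.10.0.21", "management": "10.20.0.21"},
}


def check_plan(plan, nodes) -> list[str]:
    """Return a list of problems; an empty list means the plan looks consistent."""
    problems = []
    # The storage and management subnets must be disjoint.
    for a, b in combinations(plan, 2):
        if plan[a].overlaps(plan[b]):
            problems.append(f"{a} subnet {plan[a]} overlaps {b} subnet {plan[b]}")
    # Every node needs one address on each network, inside that network's subnet.
    for node, addrs in nodes.items():
        for net in plan:
            if net not in addrs:
                problems.append(f"{node} has no {net} address")
            elif ipaddress.ip_address(addrs[net]) not in plan[net]:
                problems.append(f"{node}: {addrs[net]} is outside {net} subnet {plan[net]}")
    return problems


if __name__ == "__main__":
    print(check_plan(PLAN, NODES))  # []
```

The sketch only covers the separation of the two networks; switch redundancy is a matter of cabling and switch configuration rather than addressing.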

Any number of additional storage and compute nodes can be added.

If and when the cluster is decentralised, the network design will have to be revisited to suit the networking available at the additional data centres.

Installing and configuring the physical servers to run Linux and Ceph

The servers are Dell machines.

- On the RAID controller:
  - Configure two disks as a RAID1 (mirrored) boot device.
  - Configure each remaining data-store disk as its own RAID0 volume containing exactly one component disk. Ceph provides the redundancy itself, so it should see each data disk individually rather than as part of a striped or mirrored array.

- Use eth0 to do a network install of Debian 11 (Bullseye), selecting:
  - Static network addressing
  - SSH server only
  - All files in one partition
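If many nodes are to be installed the same way, the installer answers above can be automated with Debian's preseeding mechanism. The Python sketch below generates a preseed fragment for static addressing on eth0, the SSH server as the only tasksel task, and the installer's "All files in one partition" (`atomic`) partitioning recipe. The function name and the example addresses are hypothetical. The fragment is deliberately incomplete: it leaves locale, mirror, accounts and disk selection (the RAID1 boot volume should be chosen) to the installer, and the keys should be checked against the Bullseye `example-preseed.txt` before use.

```python
import ipaddress


def preseed_fragment(hostname: str, address: str, netmask: str, gateway: str,
                     nameservers: list[str], interface: str = "eth0") -> str:
    """Return debconf preseed lines for a static-IP, SSH-server-only, one-partition install."""
    iface = ipaddress.ip_interface(f"{address}/{netmask}")  # ValueError if malformed
    if ipaddress.ip_address(gateway) not in iface.network:
        raise ValueError(f"gateway {gateway} is not on {iface.network}")
    for ns in nameservers:
        ipaddress.ip_address(ns)  # validate each nameserver address
    lines = [
        f"d-i netcfg/choose_interface select {interface}",
        # Static network addressing instead of DHCP.
        "d-i netcfg/disable_autoconfig boolean true",
        f"d-i netcfg/get_ipaddress string {iface.ip}",
        f"d-i netcfg/get_netmask string {iface.netmask}",
        f"d-i netcfg/get_gateway string {gateway}",
        f"d-i netcfg/get_nameservers string {' '.join(nameservers)}",
        "d-i netcfg/confirm_static boolean true",
        f"d-i netcfg/get_hostname string {hostname}",
        # SSH server as the only tasksel task.
        "tasksel tasksel/first multiselect ssh-server",
        # "All files in one partition" on a plain (non-LVM) layout.
        "d-i partman-auto/method string regular",
        "d-i partman-auto/choose_recipe select atomic",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(preseed_fragment("stor1", "10.20.0.11", "255.255.255.0",
                           "10.20.0.1", ["10.20.0.2"]), end="")
```

The generated file is typically served over HTTP and passed to the network installer with the `url=` (short for `preseed/url`) boot parameter.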