Storage and compute cluster

{|style="float: right;"
|[[File:CSE_storage_and_compute_cluster_storage_and_virtual_machines_-_October_2023.pdf]]
|}

The '''storage and compute cluster''' is intended as a replacement for CSE's [[VMware]] and SAN infrastructure. Its primary functions are:

# A resilient, redundant storage cluster consisting of multiple cheap<nowiki>[-ish]</nowiki> rack-mounted storage nodes running Linux and [[Ceph]] using multiple local SSD drives, and

# Data in the storage cluster is replicated in real time across multiple hosts so that the failure of one or more nodes will not cause loss of data AND, when any such failure occurs, the cluster software (Ceph) will automatically rebuild the lost replicas on the remaining nodes.
# Likewise, compute nodes are all configured alike in terms of networking, CPU count and RAM, so should any compute node fail, the virtual machines running on it can be migrated or restarted on another compute node without loss of functionality.
# While initially co-located in the same data centre (K17), the intention is that storage and compute nodes can be distributed across multiple data centres (especially having two located at the UNSW Kensington campus) so that a data centre failure, rather than just the failure of a single host, does not preclude restoring full operation of all hosted services.

== Concept ==

Of course, if/when the cluster is decentralised, the networking will have to be revisited according to the networking available at the additional data centres.

=== What Ceph provides ===

Fundamentally, Ceph provides a redundant, distributed data store on top of physical servers running Linux. Ceph itself manages replication, patrol reads/scrubbing and maintaining redundancy ensuring data remains automatically and transparently available in the face of disk, host and/or site failures.

The data is presented for use primarily, but not exclusively, as network-accessible raw block devices (RADOS Block Devices or RBD's) for use as filesystem volumes or boot devices (the latter especially by virtual machines); and as mounted network file systems (CephFS) similar to [[NFS]]. In both cases the data backing the device or file system is replicated across the cluster.

Each storage and compute node typically runs one or more Ceph daemons while other servers accessing the data stored in the cluster will typically only have the Ceph libraries and utilities installed.

The Ceph daemons are:

# mon - monitor daemon - multiple instances across multiple nodes maintaining status and raw data replica location information. Ceph uses a quorum of mon's to avoid split brain issues and thus the number of mon's should be an odd number greater than or equal to three.
# mgr - manager daemon - one required to assist the mon's. Thus, it's useful to have two in case one dies.
# mds - a metadata server for CephFS (it holds metadata such as file permission bits, ownership, time stamps, etc.). The more the merrier and, obviously, two or more is a good thing.
# osd - the object store daemon. One per physical disk so there can be more than one on a physical host. Handles maintaining, replicating, validating and serving the data on the disk. OSD's talk to themselves a lot.
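
The odd-number advice for mon's follows from majority voting: a quorum needs more than half of the mon's, so going from three to four raises the quorum size without tolerating any more failures. A minimal sketch of that arithmetic (the function names are illustrative only and are not part of Ceph):

```shell
#!/bin/bash
# Illustrative arithmetic only; these helpers are not Ceph tooling.

# Smallest number of mon's that forms a strict majority of N.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# How many mon's can be lost while a majority still remains.
failures_tolerated() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5; do
    echo "mons=$n quorum=$(quorum_needed "$n") tolerated=$(failures_tolerated "$n")"
done
```

Three and four mon's both survive the loss of one; five survive the loss of two.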

=== What Ceph does not provide ===

Ceph tends to live in the here-and-now. Backups of the data stored in the cluster need to be managed separately. This includes disk images, particularly of virtual machines.

Ceph does support snapshots, but because Ceph has no awareness of the file systems on virtual disks, just taking a Ceph snapshot of an in-use disk image is likely to create an inconsistent image that will need, at best, a file system check (<code>fsck</code>) to mount properly or, at worst, will simply be unusable.

Thus, there are two possible backup strategies:

# Get the virtual machines using the Ceph disk images to back up the disk data themselves (e.g., <code>rsync</code>), or
# Shut down the virtual machines briefly, create a Ceph snapshot and then start the virtual machines again.

Note that in the case of a catastrophic failure of Ceph, loss of too many nodes or sites, or an administrator doing something foolish (like deleting a disk image including its snapshots), it is wise to consider disaster recovery options that don't require a functional Ceph cluster to instantiate.

See also <code>man fsfreeze</code>.
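
The pointer to <code>fsfreeze</code> suggests a middle path between the two strategies: quiesce a guest file system, snapshot the image and thaw again. The following is only a sketch under stated assumptions: the guest name, the mount point <code>/srv</code>, the image name and root SSH access to the guest are all hypothetical, and commands are printed rather than executed unless <code>DRY_RUN=0</code> is set.

```shell
#!/bin/bash
# Sketch only: guest name, mount point and image name are hypothetical.
# With DRY_RUN=1 (the default) commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# snapshot_frozen <guest> <mountpoint> <pool/image> <snapname>
snapshot_frozen() {
    local guest="$1" mnt="$2" image="$3" snap="$4" rc
    # Flush and block writes to the guest file system.
    run ssh "root@$guest" fsfreeze --freeze "$mnt" || return 1
    # Take the snapshot while the file system is quiescent.
    run rbd snap create "$image@$snap"
    rc=$?
    # Always thaw, even if the snapshot failed.
    run ssh "root@$guest" fsfreeze --unfreeze "$mnt"
    return $rc
}

snapshot_frozen debianminimal /srv rbd/debianminimal-disk1 "backup-$(date +%Y%m%d)"
```

A separate data file system is used here on purpose: freezing a guest's root file system can block the very SSH session needed to thaw it.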

=== What QEMU/KVM provides ===

# Machine virtualisation
# Limited virtual networking which is supplemented by the local host's own networking/bridging/firewalling

See:

* [https://www.qemu.org/ QEMU website]
* [https://en.wikipedia.org/wiki/QEMU QEMU at Wikipedia]
* <code>man:qemu-system-x86_64</code>
* <code>man:qemu-img</code>

== Installing and configuring the physical servers to run Linux and Ceph ==

Servers are Dell.

# On the RAID controller:
#* Configure two disks for the boot device as RAID1 (mirrored)
#* Configure the remaining data store disks as RAID0 with '''one''' single component disk each
# Use eth0 to do a network install of [[Debian]] Bullseye selecting:
#* Static network addressing
#* SSH Server only
#* Everything installed in the one partition
#* Configure timezone, keyboard, etc.
# Reboot into installed OS
# Fix/enable root login
# Change <code>sshd_config</code> to include
#* <code>UsePAM no</code>
#* <code>PasswordAuthentication no</code>
# Modify <code>/etc/default/grub</code>:
#* Change <code>GRUB_CMDLINE_LINUX_DEFAULT</code> from:
#* <code>"quiet"</code>
#* to:
#* <code>"quiet selinux=0 audit=0 ipv6.disable=1"</code>
#* Then run <code>grub-mkconfig -o /boot/grub/grub.cfg</code> and reboot
# Install packages:
#* <code>apt-get install man-db lsof strace tcpdump iptables-persistent rsync psmisc</code>
#* <code>apt-get install python2.7-minimal</code> (for utilities in <code>/root/bin</code>)
# Ensure <code>unattended-upgrades</code> is installed and enabled (<code>dpkg-reconfigure unattended-upgrades</code>)

Install the Ceph software packages:

Refer to the manual installation procedures at [https://docs.ceph.com/en/quincy/install/index_manual/#install-manual Installation (Manual)].

# <span style="text-decoration: line-through;"><code>wget -q -O- 'https://download.ceph.com/keys/release.asc' | apt-key add -</code></span>
# <span style="text-decoration: line-through;"><code>apt-add-repository 'deb https://download.ceph.com/debian-quincy/ bullseye main'</code></span>
# <code>apt-get install ceph</code>

<span style="color:red;">When first creating a Ceph cluster you need to do the following ONCE. Once the cluster is running no further bootstrapping is required. See further down for how to ADD a mon to an already-running cluster.</span>

# Perform Monitor Bootstrapping ([https://docs.ceph.com/en/quincy/install/manual-deployment/#monitor-bootstrapping Monitor bootstrapping])
# You may need to run <code>chown -R ceph:ceph /var/lib/ceph/mon/ceph-<host></code> to get the mon started the first time.
# <code>systemctl enable ceph-mon@<host></code>

The initial <code>/etc/ceph/ceph.conf</code> file will look a bit like this:

<pre>[global]
fsid = db5b6a5a-1080-46d2-974a-80fe8274c8ba
mon initial members = storage00
mon host = 129.94.242.95
auth cluster required = cephx
auth service required = cephx
auth client required = cephx

[mon.storage00]
host = storage00
mon addr = 129.94.242.95</pre>

Set up a mgr daemon:

See also [https://docs.ceph.com/en/quincy/mgr/administrator/#mgr-administrator-guide Ceph-mgr administration guide].

# <code>mkdir /var/lib/ceph/mgr/ceph-<host></code>
# <code>ceph auth get-or-create mgr.<host> mon 'allow profile mgr' osd 'allow *' mds 'allow *' > /var/lib/ceph/mgr/ceph-<host>/keyring</code>
# <code>chown -R ceph:ceph /var/lib/ceph/mgr/ceph-<host></code>
# <code>systemctl start ceph-mgr@<host></code>
# <code>systemctl enable ceph-mgr@<host></code>

Some run time configuration related to running mixed-version Ceph environments (which we hopefully don't do):

# <code>ceph config set mon mon_warn_on_insecure_global_id_reclaim true</code>
# <code>ceph config set mon mon_warn_on_insecure_global_id_reclaim_allowed true</code>
# <code>ceph config set mon auth_allow_insecure_global_id_reclaim false</code>
# <code>ceph mon enable-msgr2</code>

Check Ceph cluster-of-one-node status:

# <code>ceph status</code>

== Ceph software and configuration common across all storage and compute nodes ==

In a disaster situation, the storage and compute nodes need to be able to run without depending on any additional network services. Thus site network configuration, necessary utility scripts and any other important files are duplicated on all nodes. Principally these are:

{|class="firstcolfixed"
!File name
!Description
!Action to take manually when changed
|-
|/etc/hosts
|Node network names and IP addresses
|-
|/etc/ceph/ceph.conf
|Main Ceph configuration file. Used by all Ceph daemons and libraries, and very importantly by the mon daemons
|Restart the mon daemons (one at a time, not all at once! Keep an eye on <code>ceph status</code> while doing so)
|-
|/etc/ceph/ceph.client.admin.keyring
|Access key used by tools and utilities to grant administrative access to the cluster
|-
|/root/bin/ceph_*
|CSG-provided utilities to maintain the cluster
|-
|/etc/iptables/rules.v4
|iptables/netfilter rules to protect the cluster
|Run <code>/usr/sbin/netfilter-persistent start</code>
|}

== Adding additional storage nodes ==

# Follow the steps above for installing and configuring Linux up to using <code>apt-get</code> to install the Ceph packages.
# Update <code>/root/bin/ceph_distribute_support_files</code> and <code>/etc/hosts</code> on a running node to include the new node.
# On the same already-running node, run <code>/root/bin/ceph_distribute_support_files</code>.
# To add storage, select an unused OSD number and on the new node run:
#* <code>ceph_create_nautilus_osd /dev/<blockdevice> <osdnum></code>
#* <code>systemctl start ceph-osd@<osdnum></code>
#* <code>systemctl enable ceph-osd@<osdnum></code>

== Add additional mon daemons ==

See also [https://docs.ceph.com/en/quincy/rados/operations/add-or-rm-mons/ Adding/removing monitors].

Note: A maximum of one mon and one mgr are allowed per server/node.

<pre># mkdir /var/lib/ceph/mon/ceph-<hostname>
# ceph auth get mon. -o /tmp/keyfile
# ceph mon getmap -o /tmp/monmap
# ceph-mon -i <hostname> --mkfs --monmap /tmp/monmap --keyring /tmp/keyfile
# chown -R ceph:ceph /var/lib/ceph/mon/ceph-<hostname>
# systemctl start ceph-mon@<hostname>
# systemctl enable ceph-mon@<hostname></pre>

== Other Ceph configuration things ==

=== Set initial OSD weights. As all disks are the same size, use 1.0 until such time as other sizes are present ===

Examples:

# <code>ceph osd crush set osd.0 1.0 host=storage00</code>
# <code>ceph osd crush set osd.1 1.0 host=storage00</code>
# <code>ceph osd crush set osd.2 1.0 host=storage01</code>
# <code>ceph osd crush set osd.3 1.0 host=storage01</code>
# <code>ceph osd crush set osd.4 1.0 host=compute01</code>
# <code>ceph osd crush set osd.5 1.0 host=compute01</code>

=== Set up the crushmap hierarchy to allow space to be allocated on a per-host basis (as opposed to per-OSD) ===

<span style="color: red;">Not to be played with lightly. See [https://docs.ceph.com/en/quincy/rados/operations/crush-map/#crush-maps CRUSH maps].</span>

Example commands:

# <code>bin/ceph_show_crushmap</code>
# <code>ceph osd crush move storage00 root=default</code>
# <code>ceph osd crush move storage01 root=default</code>
# <code>ceph osd crush move compute01 root=default</code>

=== Create an RBD pool to be used for RBD devices (RADOS Block Devices) ===

See:

* [https://docs.ceph.com/en/quincy/rados/operations/pools/ Pools]
* [https://docs.ceph.com/en/quincy/rados/operations/pools/#associate-pool-to-application Associate pool with application]
* [https://docs.ceph.com/en/quincy/rbd/rados-rbd-cmds/#create-a-block-device-pool Create a block device pool]

# <code>ceph osd pool create rbd 128 128</code>
# <code>rbd pool init rbd</code>

=== Add MDS daemons for CephFS on two nodes (so we have two of them in case one fails) ===

Like this:

# <code>mkdir -p /var/lib/ceph/mds/ceph-<hostname></code>
# <code>ceph-authtool --create-keyring /var/lib/ceph/mds/ceph-<hostname>/keyring --gen-key -n mds.<hostname></code>
# <code>ceph auth add mds.<hostname> osd "allow rwx" mds "allow *" mon "allow profile mds" -i /var/lib/ceph/mds/ceph-<hostname>/keyring</code>
# <code>chown -R ceph:ceph /var/lib/ceph/mds/ceph-<hostname></code>
# <code>systemctl start ceph-mds@<hostname></code>
# <code>systemctl status ceph-mds@<hostname></code>

Update <code>/etc/ceph/ceph.conf</code> and distribute.

=== Create the transportable "vm" file system where the qemu scripts for each VM will go ===

# <code>ceph osd pool create vmfs_data 128 128</code>
# <code>ceph osd pool create vmfs_metadata 128 128</code>
# <code>ceph fs new vm vmfs_metadata vmfs_data</code>

== Building a compute node ==

Note: Virtual machines run with their console available via VNC '''only''' via the loopback interface (127.0.0.1). Thus, one must use an SSH tunnel to access the consoles.
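
A VM started with <code>-vnc 127.0.0.1:N</code> listens on TCP port 5900+N of its compute node, so the tunnel only needs to forward that one port. A minimal sketch that prints the matching SSH command (the helper names are illustrative, and logging in as root on <code>compute01</code> is an assumption):

```shell
#!/bin/bash
# Sketch: print the SSH command that forwards a VM's VNC console.
# QEMU's "-vnc 127.0.0.1:N" listens on TCP port 5900+N on the compute node.

vnc_port() {
    echo $(( 5900 + $1 ))
}

# vnc_tunnel_cmd <compute-node> <vnc-display-number>
vnc_tunnel_cmd() {
    local port
    port="$(vnc_port "$2")"
    echo "ssh -N -L $port:127.0.0.1:$port root@$1"
}

# Example: the VM started with NUM=1 on compute01 (root login is an assumption).
vnc_tunnel_cmd compute01 1
```

With the tunnel up, a VNC viewer on the workstation connects to 127.0.0.1 on the same port.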

* Follow the steps outlined above to do a basic setup of the server and install the Ceph software on it. Then,
* Follow steps 1 through 3 in ''Adding additional storage nodes'' (above).

Run:

# <code>apt-get install qemu-system-x86 qemu-utils qemu-block-extra</code>
# <code>apt-get install bridge-utils</code>

Set up <code>/etc/network/interfaces</code>. Use the following for inspiration on how to set up bridging:

<pre># This file describes the network interfaces available on your system
# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto eth0
iface eth0 inet static
    address 129.94.242.146/24
    gateway 129.94.242.1
    dns-nameservers 129.94.242.2
    dns-search cse.unsw.edu.au

# The trunk
auto eth2.380
iface eth2.380 inet manual

auto eth2.381
iface eth2.381 inet manual

auto eth2.385
iface eth2.385 inet manual

auto br380
iface br380 inet manual
    bridge_ports eth2.380

auto br381
iface br381 inet manual
    bridge_ports eth2.381

auto br385
iface br385 inet manual
    bridge_ports eth2.385</pre>

=== Configure qemu to allow virtual machines to use bridge devices ===

# <code>mkdir /etc/qemu</code>
# <code>echo "allow br380" > /etc/qemu/bridge.conf</code>
# <code>echo "allow br381" >> /etc/qemu/bridge.conf</code>
# <code>echo "allow br385" >> /etc/qemu/bridge.conf</code>
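
When the list of VLANs grows, the same file can be generated from the VLAN IDs instead of being appended to by hand. A small sketch, assuming bridges are always named <code>br</code> followed by the VLAN ID as in the interfaces file above; the function name is illustrative, and the example writes to a scratch file rather than <code>/etc/qemu/bridge.conf</code>:

```shell
#!/bin/bash
# Sketch: write one "allow brNNN" line per VLAN ID to the given file.
# write_bridge_conf <file> <vlan-id>...
write_bridge_conf() {
    local conf="$1" vlan
    shift
    for vlan in "$@"; do
        echo "allow br$vlan"
    done > "$conf"
}

# Try it against a scratch file rather than /etc/qemu/bridge.conf.
scratch="$(mktemp)"
write_bridge_conf "$scratch" 380 381 385
cat "$scratch"
rm -f "$scratch"
```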

=== <code>/root/bin/ceph_mount_qemu_machines</code> ===

<pre>#!/bin/bash
mount -t ceph -o name=admin :/ /usr/local/qemu_machines && df -m
</pre>

See <code>/usr/local/qemu_machines</code>.

=== <code>/usr/local/qemu_machines/common/start_vm</code> ===

<pre>#!/bin/bash

# This file is sourced by the per-VM start_vm scripts, so $0 is the caller.
cd `dirname "$0"` || exit 1

# Defaults for anything the per-VM script did not set.
if [ "a$MEM" = "a" ]; then MEM="1G"; fi

# Random five-octet MAC prefix; the NIC index is appended as the sixth octet below.
if [ "a$MAC" = "a" ]; then
    MAC="56"
    MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"
    MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"
    MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"
    MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"
#   MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"
fi

if [ "a$BOOT" = "a" ]; then BOOT="c"; fi
if [ "a$NICE" = "a" ]; then NICE="0"; fi
if [ "a$CPU" = "a" ]; then CPU="host"; fi
if [ "a$KVM" = "akvm" ]; then KVM="-enable-kvm -cpu $CPU"; else KVM=""; fi
if [ "a$SMP" != "a" ]; then SMP="-smp $SMP"; fi
if [ "a$MACHINE" != "a" ]; then MACHINE="-machine $MACHINE"; else MACHINE=""; fi
if [ "a$NODAEMON" = "a" ]; then DAEMONIZE="-daemonize"; else DAEMONIZE=""; fi

# One -drive option per DISK0, DISK1, ... until the first unset one.
n=0
DISKS=""
while true; do
    eval d=\$DISK$n
    if [ "a$d" = "a" ]; then break; fi
    if [ "a$DISKS" != "a" ]; then DISKS="${DISKS} "; fi
    DISKS="${DISKS}-drive file=$d,if=virtio"
    n=$(( $n + 1 ))
done

# One bridged virtio NIC per NETIF0, NETIF1, ... until the first unset one.
n=0
NETIFS=""
while true; do
    eval d=\$NETIF$n
    if [ "a$d" = "a" ]; then break; fi
    if [ "a$NETIFS" != "a" ]; then NETIFS="${NETIFS} "; fi
    NETIFS="${NETIFS}-netdev bridge,id=hostnet$n,br=$d -device virtio-net-pci,netdev=hostnet$n,mac=$MAC:`printf "%02x" $n`"
    n=$(( $n + 1 ))
done

exec /usr/bin/nice -n $NICE \
    /usr/bin/qemu-system-x86_64 \
    -vnc 127.0.0.1:$NUM \
    $DISKS \
    $MACHINE \
    $KVM \
    $SMP \
    $NETIFS \
    -m $MEM \
    -boot $BOOT \
    -serial none \
    -parallel none \
    $EXTRAARGS \
    $DAEMONIZE

exit</pre>

=== <code>/usr/local/qemu_machines/debianminimal/start_vm</code> ===

<pre>#!/bin/bash

#BOOT="d"
#EXTRAARGS="-cdrom /usr/local/qemu_machines/isos/debian-11.5.0-amd64-netinst.iso"

NUM=1
KVM="kvm"
DISK0="rbd:rbd/debianminimal-disk0,format=rbd"
NETIF0="br385"
#NODAEMON="y"

. /usr/local/qemu_machines/common/start_vm</pre>
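
Unless <code>MAC</code> is preset, the common script builds each NIC's address from a fixed first octet (56), four random octets and the interface index as the last octet. The first octet matters: 0x56 has the multicast bit clear and the locally-administered bit set, so the generated addresses stay out of vendor-assigned space. A sketch isolating that logic for experimentation (the function names are illustrative and do not appear in the scripts above):

```shell
#!/bin/bash
# Sketch of the MAC scheme used by common/start_vm, isolated for testing.

# Five-octet prefix: fixed 0x56 followed by four random octets.
gen_mac_prefix() {
    local mac="56" octet i
    for i in 1 2 3 4; do
        printf -v octet '%02x' $(( RANDOM & 0xff ))
        mac="$mac:$octet"
    done
    echo "$mac"
}

# Full MAC for interface number N: prefix plus N as the last octet.
nic_mac() {
    echo "$1:$(printf '%02x' "$2")"
}

prefix="$(gen_mac_prefix)"
nic_mac "$prefix" 0
nic_mac "$prefix" 1

# 0x56 = 0b01010110: bit 0 clear (unicast), bit 1 set (locally administered).
echo "unicast=$(( (0x56 & 0x01) == 0 )) local=$(( (0x56 & 0x02) != 0 ))"
```

Because the prefix is random on every start, a VM whose guest configuration depends on a stable MAC address would need <code>MAC</code> set in its own <code>start_vm</code> script.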

== Some info about RADOS Block Devices ==

See [https://docs.ceph.com/en/quincy/rbd/ Ceph block device].

RADOS Block Devices (RBD's) are network block devices created in a Ceph storage cluster which are available as block devices to clients of the cluster, typically to provide block storage -- e.g., boot disks, etc. -- to virtual machines running on hosts in the cluster.

RBD's are created in a Ceph storage pool which is, when the pool is created, associated with Ceph's internal "rbd" application. By convention this pool is typically called… <drum roll, please!>… "rbd".

The <code>start_vm</code> script above shows how to refer to an RBD when starting <code>qemu</code>.

Here are some useful commands to get you started:

{|class="firstcolfixed"
!Command
!Description
|-
|qemu-img create -f raw rbd:rbd/test-disk0 50G
|Create an RBD using <code>qemu-img</code> which is 50G in size (this can be expanded later)
|-
|ceph osd pool ls
|Get a list of all Ceph storage pools
|-
|ceph osd pool ls detail
|Get full details of all Ceph storage pools including replication sizes, striping, hashing, replica distribution policy, etc.
|-
|rbd ls -l
|Get a list of the RBD's and their sizes in the default RBD storage pool (namely "rbd")
|-
|rbd -h
|The start of a busy morning reading documentation
|}

== Rebooting nodes - do so with care ==

The nodes in the storage cluster should not be rebooted more than one at a time. The data in the cluster is replicated across the nodes and a minimum number of replicas need to be online at all times. Taking out more than one node at a time can (and usually does) mean that too few replicas of the data are available, and the cluster will grind to a halt (including all the VMs relying on it).

It's not a major catastrophe (depending on how you define "major") when that happens as the cluster will regroup once the nodes come back online, but VMs depending on the cluster for, say, their root filesystem will die due to built-in Linux kernel timeouts after two minutes without disk access. In this case the VM invariably needs to be rebooted.

Ideally, you should follow this sequence when rebooting any node which contributes storage to the pool (i.e., storage00, storage01 and compute01):

# Pick a good time,
# Run <code>ceph osd set noout</code> on any node. This tells Ceph that there's going to be a temporary loss of a node and that it shouldn't start rebuilding the missing data on remaining nodes because the node is coming back soon,
# Run <code>systemctl stop ceph-osd@<osdnum></code> on the node you're planning to reboot to stop each OSD running on the node, replacing <code><osdnum></code> with the relevant OSD numbers,
# Wait about ten seconds and then run <code>ceph status</code> on any node and make sure Ceph isn't having conniptions due to the missing OSD's. If it simply reports that the cluster is OK and there's just some degradation then you're good to go. You may need to do this a few times until Ceph settles,
# Reboot the node,
# Keep an eye on <code>ceph status</code> on any other node and soon after the rebooted node comes back on line the degradation should go away and there'll just be the warning about the "noout",
# When you've finished with the reboots, run <code>ceph osd unset noout</code> to reset the "noout" flag. <code>ceph status</code> should say everything is OK.
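
The sequence above can be sketched as a pair of helper functions. This is only a sketch: the function names and the OSD numbers in the example are illustrative, commands are printed rather than run unless <code>DRY_RUN=0</code> is set, and the reboot itself is deliberately left to the administrator after reading the <code>ceph status</code> output.

```shell
#!/bin/bash
# Sketch of the reboot sequence. Commands are printed, not run,
# unless DRY_RUN=0. The OSD numbers passed in are examples.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# prepare_reboot <osdnum>...   (run on the node about to be rebooted)
prepare_reboot() {
    local osd
    run ceph osd set noout
    for osd in "$@"; do
        run systemctl stop "ceph-osd@$osd"
    done
    run sleep 10
    run ceph status
}

# finish_reboots   (run on any node once all reboots are done)
finish_reboots() {
    run ceph osd unset noout
    run ceph status
}

prepare_reboot 0 1
```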

Latest revision as of 09:25, 11 October 2023

| File:CSE storage and compute cluster storage and virtual machines - October 2023.pdf |

The storage and compute cluster is intended as a replacement for CSE's VMware and SAN infrastructure. Its primary functions are:

- A resilient, redundant storage cluster consisting of multiple cheap[-ish] rack-mounted storage nodes running Linux and Ceph using multiple local SSD drives, and

- Multiple compute nodes running Linux and QEMU/KVM acting as both secondary storage nodes and virtual machine hosts. These compute nodes are similar in CPU count and RAM to CSE's discrete login/VLAB servers.

Important implementation considerations

- Data in the storage cluster is replicated in real time across multiple hosts so that the failure of one or more nodes will not cause loss of data AND, when any such failure occurs, the cluster software (Ceph) will automatically rebuild the lost replicas on the remaining nodes.

- Likewise, compute nodes are all configured alike in terms of networking, CPU count and RAM, so should any compute node fail, the virtual machines running on it can be migrated or restarted on another compute node without loss of functionality.

- While initially co-located in the same data centre (K17), the intention is that storage and compute nodes can be distributed across multiple data centres (especially having two located at the UNSW Kensington campus) so that a data centre failure, rather than just the failure of a single host, does not preclude restoring full operation of all hosted services.

Concept

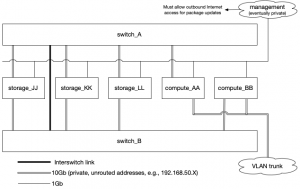

The diagram at the right shows the basic concept of the cluster.

- The primary storage and compute nodes have 10Gb network interfaces used to access and maintain the data store.

- There are two network switches providing redundancy for the storage node network traffic and ensuring that at least one compute node will have access to the data store in case of switch failure.

- Management of the cluster happens via a separate subnetwork to the data store's own traffic network.

Any number of additional storage and compute nodes can be added.

Of course, if/when the cluster is decentralised, the networking will have to be revisited according to the networking available at the additional data centres.

What Ceph provides

Fundamentally, Ceph provides a redundant, distributed data store on top of physical servers running Linux. Ceph itself manages replication, patrol reads/scrubbing and maintaining redundancy ensuring data remains automatically and transparently available in the face of disk, host and/or site failures.

The data is presented for use primarily, but not exclusively, as network-accessible raw block devices (RADOS Block Devices or RBD's) for use as filesystem volumes or boot devices (the latter especially by virtual machines); and as mounted network file systems (CephFS) similar to NFS. In both cases the data backing the device or file system is replicated across the cluster.

Each storage and compute node typically runs one or more Ceph daemons while other servers accessing the data stored in the cluster will typically only have the Ceph libraries and utilities installed.

The Ceph daemons are:

- mon - monitor daemon - multiple instances across multiple nodes maintaining status and raw data replica location information. Ceph uses a quorum of mon's to avoid split brain issues and thus the number of mon's should be an odd number greater than or equal to three.

- mgr - manager daemon - one required to assist the mon's. Thus, it's useful to have two in case one dies.

- mds - a metadata server for CephFS (it holds metadata such as file permission bits, ownership, time stamps, etc.). The more the merrier and, obviously, two or more is a good thing.

- osd - the object store daemon. One per physical disk so there can be more than one on a physical host. Handles maintaining, replicating, validating and serving the data on the disk. OSD's talk to themselves a lot.

What Ceph does not provide

Ceph tends to live in the here-and-now. Backups of the data stored in the cluster need to be managed separately. This includes disk images, particularly of virtual machines.

Ceph does support snapshots, but because Ceph has no awareness of the file systems on virtual disks just taking a Ceph snapshot of an in-use disk image is likely to create an inconsistent image that will need, at best, a file system check (fsck) to mount properly or, at worst, will simply be unusable.

Thus, there are two possible backup strategies:

- Get the virtual machines using the Ceph disk images to back up the disk data themselves (e.g., rsync), or

- Shut down the virtual machines briefly, create a Ceph snapshot and then start the virtual machines again.

Note that in the case of a catastrophic failure of Ceph, loss of too many nodes or sites, or an administrator doing something foolish (like deleting a disk image including its snapshots), it is wise to consider disaster recovery options that don't require a functional Ceph cluster to instantiate.

See also man fsfreeze.

What QEMU/KVM provides

- Machine virtualisation

- Limited virtual networking which is supplemented by the local host's own networking/bridging/firewalling

See:

- QEMU website

- QEMU at Wikipedia

- man:qemu-system-x86_64

- man:qemu-img

Installing and configuring the physical servers to run Linux and Ceph

Servers are Dell.

- On the RAID controller:
  - Configure two disks for the boot device as RAID1 (mirrored)
  - Configure the remaining data store disks as RAID0 with one single component disk each
- Use eth0 to do a network install of Debian Bullseye selecting:
  - Static network addressing
  - SSH Server only
  - Everything installed in the one partition
  - Configure timezone, keyboard, etc.
- Reboot into installed OS
- Fix/enable root login
- Change sshd_config to include:
  - UsePAM no
  - PasswordAuthentication no
- Modify /etc/default/grub:
  - Change GRUB_CMDLINE_LINUX_DEFAULT from "quiet" to "quiet selinux=0 audit=0 ipv6.disable=1"
  - Then run grub-mkconfig -o /boot/grub/grub.cfg and reboot
- Install packages:
  - apt-get install man-db lsof strace tcpdump iptables-persistent rsync psmisc
  - apt-get install python2.7-minimal (for utilities in /root/bin)
- Ensure unattended-upgrades is installed and enabled (dpkg-reconfigure unattended-upgrades)

Install the Ceph software packages:

Refer to the manual installation procedures at Installation (Manual).

- wget -q -O- 'https://download.ceph.com/keys/release.asc' | apt-key add - (struck out on the wiki page)
- apt-add-repository 'deb https://download.ceph.com/debian-quincy/ bullseye main' (struck out on the wiki page)
- apt-get install ceph

When first creating a Ceph cluster you need to do the following ONCE. Once the cluster is running no further bootstrapping is required. See further down for how to ADD a mon to an already-running cluster.

- Perform Monitor Bootstrapping (Monitor bootstrapping)

- You may need to run chown -R ceph:ceph /var/lib/ceph/mon/ceph-<host> to get the mon started the first time.

- systemctl enable ceph-mon@<host>

The initial /etc/ceph/ceph.conf file will look a bit like this:

    [global]
    fsid = db5b6a5a-1080-46d2-974a-80fe8274c8ba
    mon initial members = storage00
    mon host = 129.94.242.95
    auth cluster required = cephx
    auth service required = cephx
    auth client required = cephx

    [mon.storage00]
    host = storage00
    mon addr = 129.94.242.95

Set up a mgr daemon:

See also Ceph-mgr administration guide.

- mkdir /var/lib/ceph/mgr/ceph-<host>
- ceph auth get-or-create mgr.<host> mon 'allow profile mgr' osd 'allow *' mds 'allow *' > /var/lib/ceph/mgr/ceph-<host>/keyring
- chown -R ceph:ceph /var/lib/ceph/mgr/ceph-<host>
- systemctl start ceph-mgr@<host>
- systemctl enable ceph-mgr@<host>

Some run time configuration related to running mixed-version Ceph environments (which we hopefully don't do):

- ceph config set mon mon_warn_on_insecure_global_id_reclaim true
- ceph config set mon mon_warn_on_insecure_global_id_reclaim_allowed true
- ceph config set mon auth_allow_insecure_global_id_reclaim false
- ceph mon enable-msgr2

Check Ceph cluster-of-one-node status:

ceph status

Ceph software and configuration common across all storage and compute nodes

In a disaster situation, the storage and compute nodes need to be able to run without depending on any additional network services. Thus site network configuration, necessary utility scripts and any other important files are duplicated on all nodes. Principally these are:

| File name | Description | Action to take manually when changed |
|---|---|---|
| /etc/hosts | Node network names and IP addresses | |
| /etc/ceph/ceph.conf | Main Ceph configuration file. Used by all Ceph daemons and libraries, and very importantly by the mon daemons | Restart the mon daemons (one at a time, not all at once! Keep an eye on ceph status while doing so) |
| /etc/ceph/ceph.client.admin.keyring | Access key used by tools and utilities to grant administrative access to the cluster | |
| /root/bin/ceph_* | CSG-provided utilities to maintain the cluster | |
| /etc/iptables/rules.v4 | iptables/netfilter rules to protect the cluster | Run /usr/sbin/netfilter-persistent start |

Adding additional storage nodes

1. Follow the steps above for installing and configuring Linux up to using apt-get to install the Ceph packages.

2. Update /root/bin/ceph_distribute_support_files and /etc/hosts on a running node to include the new node.

3. On the same already-running node, run /root/bin/ceph_distribute_support_files.

4. To add storage, select an unused OSD number and on the new node run:

ceph_create_nautilus_osd /dev/<blockdevice> <osdnum>
systemctl start ceph-osd@<osdnum>
systemctl enable ceph-osd@<osdnum>
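Selecting an unused OSD number can be done by eye, but a small helper makes it less error-prone. The sketch below is an illustration only: next_free_osd is a hypothetical name and is not one of the CSG-provided /root/bin/ceph_* utilities. It assumes the list of existing OSD ids arrives on standard input, one id per line, which is the form printed by "ceph osd ls".

```shell
# Hypothetical helper: read existing OSD ids (one per line) on stdin and
# print the lowest non-negative integer that is not already in use.
next_free_osd() {
    sort -n | {
        want=0
        while read -r id; do
            # Skip blank lines
            [ -z "$id" ] && continue
            # Ids arrive in ascending order, so each match bumps the candidate
            if [ "$id" -eq "$want" ]; then
                want=$(( want + 1 ))
            fi
        done
        echo "$want"
    }
}
```

On a running node this would be used as "ceph osd ls | next_free_osd". With existing ids 0, 1 and 3 it prints 2, so gaps left by removed OSDs are reused first.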

Add additional mon daemons

See also Adding/removing monitors.

Note: A maximum of one mon and one mgr are allowed per server/node.

# mkdir /var/lib/ceph/mon/ceph-<hostname>
# ceph auth get mon. -o /tmp/keyfile
# ceph mon getmap -o /tmp/monmap
# ceph-mon -i <hostname> --mkfs --monmap /tmp/monmap --keyring /tmp/keyfile
# chown -R ceph:ceph /var/lib/ceph/mon/ceph-<hostname>
# systemctl start ceph-mon@<hostname>
# systemctl enable ceph-mon@<hostname>

Other Ceph configuration things

Set initial OSD weights. As all disks are the same size, use 1.0 until such time as other sizes are present

Examples:

ceph osd crush set osd.0 1.0 host=storage00
ceph osd crush set osd.1 1.0 host=storage00
ceph osd crush set osd.2 1.0 host=storage01
ceph osd crush set osd.3 1.0 host=storage01
ceph osd crush set osd.4 1.0 host=compute01
ceph osd crush set osd.5 1.0 host=compute01

Set up the crushmap hierarchy to allow space to be allocated on a per-host basis (as opposed to per-OSD)

Not to be played with lightly. See CRUSH maps.

Example commands:

bin/ceph_show_crushmap
ceph osd crush move storage00 root=default
ceph osd crush move storage01 root=default
ceph osd crush move compute01 root=default

Create an RBD pool to be used for RBD devices (RADOS Block Devices)

See:

ceph osd pool create rbd 128 128
rbd pool init rbd

Add MDS daemons for CephFS on two nodes (so we have two of them in case one fails)

Like this:

mkdir -p /var/lib/ceph/mds/ceph-<hostname>
ceph-authtool --create-keyring /var/lib/ceph/mds/ceph-<hostname>/keyring --gen-key -n mds.<hostname>
ceph auth add mds.<hostname> osd "allow rwx" mds "allow *" mon "allow profile mds" -i /var/lib/ceph/mds/ceph-<hostname>/keyring
chown -R ceph:ceph /var/lib/ceph/mds/ceph-<hostname>
systemctl start ceph-mds@<hostname>
systemctl status ceph-mds@<hostname>

Update /etc/ceph/ceph.conf and distribute.

Create the transportable "vm" file system where the qemu scripts for each VM will go

ceph osd pool create vmfs_data 128 128
ceph osd pool create vmfs_metadata 128 128
ceph fs new vm vmfs_metadata vmfs_data

Building a compute node

Note: Virtual machines run with their console available via VNC only via the loopback interface (127.0.0.1). Thus, one must use an SSH tunnel to access the consoles.
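As an illustration of the tunnel mentioned in the note, the sketch below prints the ssh command for a given console. It relies on the standard VNC convention that display N listens on TCP port 5900 + N, and on the per-VM scripts passing their NUM value to qemu as the display number. The function name vnc_tunnel_cmd and the host name in the example call are assumptions made for illustration; substitute whichever compute node is running the VM.

```shell
# Print the ssh command that forwards a VM's VNC console to the local machine.
# $1 = compute node running the VM (example name only)
# $2 = the VM's VNC display number (NUM in its start_vm file)
vnc_tunnel_cmd() {
    port=$(( 5900 + $2 ))
    # -N: no remote command, just forward; -L: local port -> node's loopback
    echo "ssh -N -L ${port}:127.0.0.1:${port} $1"
}

vnc_tunnel_cmd compute01 1
```

For display 1 this prints "ssh -N -L 5901:127.0.0.1:5901 compute01". With the tunnel open, point a VNC viewer on your own machine at 127.0.0.1:5901.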

- Follow steps outlined above to do a basic setup of the server and install the Ceph software on it. Then,

- Follow steps 1 thru 3 in Adding additional storage nodes (above).

Run:

apt-get install qemu-system-x86 qemu-utils qemu-block-extra
apt-get install bridge-utils

Set up /etc/network/interfaces. Use the following for inspiration on how to set up bridging:

# This file describes the network interfaces available on your system
# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto eth0
iface eth0 inet static
    address 129.94.242.146/24
    gateway 129.94.242.1
    dns-nameservers 129.94.242.2
    dns-search cse.unsw.edu.au

# The trunk
auto eth2.380
iface eth2.380 inet manual

auto eth2.381
iface eth2.381 inet manual

auto eth2.385
iface eth2.385 inet manual

auto br380
iface br380 inet manual
    bridge_ports eth2.380

auto br381
iface br381 inet manual
    bridge_ports eth2.381

auto br385
iface br385 inet manual
    bridge_ports eth2.385

Configure qemu to allow virtual machines to use bridge devices

mkdir /etc/qemu
echo "allow br380" > /etc/qemu/bridge.conf
echo "allow br381" >> /etc/qemu/bridge.conf
echo "allow br385" >> /etc/qemu/bridge.conf

/root/bin/ceph_mount_qemu_machines

#!/bin/bash
mount -t ceph -o name=admin :/ /usr/local/qemu_machines && df -m

See /usr/local/qemu_machines.

/usr/local/qemu_machines/common/start_vm

#!/bin/bash

cd `dirname "$0"` || exit 1

if [ "a$MEM" = "a" ]; then MEM="1G"; fi

if [ "a$MAC" = "a" ]; then

MAC="56"

MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"

MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"

MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"

MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"

# MAC="$MAC:`printf "%02x" $(( $RANDOM & 0xff ))`"

fi

if [ "a$BOOT" = "a" ]; then BOOT="c"; fi

if [ "a$NICE" = "a" ]; then NICE="0"; fi

if [ "a$CPU" = "a" ]; then CPU="host"; fi

if [ "a$KVM" = "akvm" ]; then KVM="-enable-kvm -cpu $CPU"; else KVM=""; fi

if [ "a$SMP" != "a" ]; then SMP="-smp $SMP"; fi

if [ "a$MACHINE" != "a" ]; then MACHINE="-machine $MACHINE"; else MACHINE=""; fi

if [ "a$NODAEMON" = "a" ]; then DAEMONIZE="-daemonize"; else DAEMONIZE=""; fi

n=0

DISKS=""

while true; do

eval d=\$DISK$n

if [ "a$d" = "a" ]; then break; fi

if [ "a$DISKS" != "a" ]; then DISKS="${DISKS} "; fi

DISKS="${DISKS}-drive file=$d,if=virtio"

n=$(( $n + 1 ))

done

n=0

NETIFS=""

while true; do

eval d=\$NETIF$n

if [ "a$d" = "a" ]; then break; fi

if [ "a$NETIFS" != "a" ]; then NETIFS="${NETIFS} "; fi

NETIFS="${NETIFS}-netdev bridge,id=hostnet$n,br=$d -device virtio-net-pci,netdev=hostnet$n,mac=$MAC:`printf "%02x" $n`"

n=$(( $n + 1 ))

done

exec /usr/bin/nice -n $NICE \

/usr/bin/qemu-system-x86_64 \

-vnc 127.0.0.1:$NUM \

$DISKS \

$MACHINE \

$KVM \

$SMP \

$NETIFS \

-m $MEM \

-boot $BOOT \

-serial none \

-parallel none \

$EXTRAARGS \

$DAEMONIZE

exit
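The DISK and NETIF loops in the script above use indirect variable lookup: "eval d=\$DISK$n" reads DISK0, DISK1, and so on in turn until it finds one that is unset. To see what that produces without starting qemu, the sketch below wraps the same disk loop in a function. The function name build_disk_args and the two example disk names are made up for illustration.

```shell
# The same indirect-variable loop as the DISK section of common/start_vm,
# wrapped in a function so the result can be inspected on its own.
build_disk_args() {
    n=0
    DISKS=""
    while true; do
        # Look up the variable named DISK<n> (DISK0, DISK1, ...)
        eval d=\$DISK$n
        # Stop at the first unset or empty DISK<n>
        if [ "a$d" = "a" ]; then break; fi
        if [ "a$DISKS" != "a" ]; then DISKS="${DISKS} "; fi
        DISKS="${DISKS}-drive file=$d,if=virtio"
        n=$(( n + 1 ))
    done
    echo "$DISKS"
}

# Example VM with two RBD-backed disks (names are illustrative only)
DISK0="rbd:rbd/example-disk0,format=rbd"
DISK1="rbd:rbd/example-disk1,format=rbd"
build_disk_args
```

One consequence of stopping at the first unset variable is that the numbering must be contiguous: if DISK0 and DISK2 are set but DISK1 is not, only DISK0 is passed to qemu. The same applies to NETIF0, NETIF1, and so on.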

/usr/local/qemu_machines/debianminimal/start_vm

#!/bin/bash
#BOOT="d"
#EXTRAARGS="-cdrom /usr/local/qemu_machines/isos/debian-11.5.0-amd64-netinst.iso"
NUM=1
KVM="kvm"
DISK0="rbd:rbd/debianminimal-disk0,format=rbd"
NETIF0="br385"
#NODAEMON="y"
. /usr/local/qemu_machines/common/start_vm

Some info about RADOS Block Devices

See Ceph block device.

RADOS Block Devices (RBD's) are network block devices created in a Ceph storage cluster which are available as block devices to clients of the cluster, typically to provide block storage -- e.g., boot disks, etc. -- to virtual machines running on hosts in the cluster.

RBD's are created in a Ceph storage pool which is, when the pool is created, associated with Ceph's internal "rbd" application. By convention this pool is typically called… <drum roll, please!>… "rbd".

The start_vm script above shows how to refer to an RBD when starting qemu.

Here are some useful commands to get you started:

| Command | Description |
|---|---|
| qemu-img create -f raw rbd:rbd/test-disk0 50G | Create an RBD using qemu-img which is 50G in size (this can be expanded later) |
| ceph osd pool ls | Get a list of all Ceph storage pools |
| ceph osd pool ls detail | Get full details of all Ceph storage pools including replication sizes, striping, hashing, replica distribution policy, etc. |
| rbd ls -l | Get a list of the RBD's and their sizes in the default RBD storage pool (namely "rbd") |
| rbd -h | The start of a busy morning reading documentation |
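Putting the pieces together, a new VM needs an RBD for its disk and a per-VM start_vm file that names it. The sketch below prints such a file, modelled on the debianminimal one. The function name print_start_vm, the VM name "testvm" and the display number 2 are assumptions for illustration, and nothing is written to disk.

```shell
# Print a per-VM start_vm file modelled on debianminimal/start_vm.
# $1 = VM name, $2 = VNC display number (NUM), $3 = bridge device
print_start_vm() {
    cat <<EOF
#!/bin/bash
NUM=$2
KVM="kvm"
DISK0="rbd:rbd/$1-disk0,format=rbd"
NETIF0="$3"
. /usr/local/qemu_machines/common/start_vm
EOF
}

print_start_vm testvm 2 br385
```

The matching disk would be created beforehand with "qemu-img create -f raw rbd:rbd/testvm-disk0 50G", as in the table above. Because NUM selects the VNC port on the loopback interface, it presumably has to be unique among the VMs running on the same compute node.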

Rebooting nodes - do so with care

The nodes in the storage cluster should not be rebooted more than one at a time. The data in the cluster is replicated across the nodes and a minimum number of replicas needs to be online at all times. Taking out more than one node at a time can (and usually does) mean that insufficient replicas of the data are available and the cluster will grind to a halt (including all the VMs relying on it).

It's not a major catastrophe (depending on how you define "major") when that happens as the cluster will regroup once the nodes come back online, but VMs depending on the cluster for, say, their root filesystem will die due to built-in Linux kernel timeouts after two minutes of no disk. In this case the VM invariably needs to be rebooted.

Ideally, you should follow this sequence when rebooting any node which contributes storage to the pool (i.e., storage00, storage01 and compute01):

- Pick a good time,

- Run "ceph osd set noout" on any node. This tells Ceph that there's going to be a temporary loss of a node and that it shouldn't start rebuilding the missing data on remaining nodes because the node is coming back soon,

- Run "systemctl stop ceph-osd@<osdnum>" on the node you're planning to reboot to stop each OSD running on the node, replacing <osdnum> with the relevant OSD numbers,

- Wait about ten seconds and then run "ceph status" on any node and make sure Ceph isn't having conniptions due to the missing OSD's. If it simply reports that the cluster is OK and there's just some degradation then you're good to go. You may need to do this a few times until Ceph settles,

- Reboot the node,

- Keep an eye on "ceph status" on any other node. Soon after the rebooted node comes back online the degradation should go away and there'll just be the warning about the "noout" flag,

- When you've finished with the reboots, run "ceph osd unset noout" to reset the "noout" flag. "ceph status" should then say everything is OK.
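As a memory aid, the sequence above can be written as a small function that prints the commands for one node without running any of them. This is a sketch only: reboot_plan is a hypothetical name, and the OSD numbers in the example call assume the node hosts osd.0 and osd.1.

```shell
# Print (do not run) the commands for rebooting one node that contributes
# storage. Arguments: the OSD numbers hosted on the node being rebooted.
reboot_plan() {
    echo "ceph osd set noout"
    # One stop command per OSD on the node
    for osd in "$@"; do
        echo "systemctl stop ceph-osd@${osd}"
    done
    # Give Ceph a moment to settle, then check it only reports degradation
    echo "sleep 10; ceph status"
    echo "reboot"
    # Run from another node until the degradation clears
    echo "ceph status"
    echo "ceph osd unset noout"
}

reboot_plan 0 1
```

When rebooting several nodes in turn, the final "ceph osd unset noout" only needs to be run once, after the last node is back and "ceph status" shows nothing but the "noout" warning.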